Diffy - AI Commit Messages with Context

Generate commit messages from your git diff using AI (OpenAI, GitHub Copilot, or Google Gemini). Diffy adds codebase indexing for context, customizable message formats, and extensive configuration options.

✨ Key Features

- 🤖 Multiple AI Providers: Choose between OpenAI, GitHub Copilot, or Google Gemini

- 🧠 Smart Codebase Indexing: 3 strategies (Compact, Structured, AST-based) for context-aware commits

- 📝 Flexible Formats: Conventional Commits, Gitmoji, or custom templates

- 🔍 Diff Analysis: Auto-extracts modified functions, classes, and imports

- ⚙️ Highly Configurable: 20+ settings for complete customization

- 📋 One-Click Generation: Direct SCM integration for instant commit messages

- Smart Filtering: Auto-excludes lock files, images, and other irrelevant changes

🚀 Installation

From VS Code Marketplace (Recommended)

- Open VS Code

- Go to Extensions (Ctrl+Shift+X / Cmd+Shift+X)

- Search for "Diffy"

- Click Install

From OpenVSX

- Visit OpenVSX

- Click Download

- Install the .vsix file in VS Code

From Command Line

code --install-extension hitclaw.diffy-explain-ai

Requirements

- VS Code: ^1.105.0

- AI Provider: One of:

- GitHub Copilot subscription (recommended - no API key needed)

- OpenAI API key

- Google Gemini API key

🛠️ Quick Start

1. Choose Your AI Provider

GitHub Copilot (Easiest Setup)

{

"diffy-explain-ai.aiServiceProvider": "vscode-lm"

}

OpenAI

{

"diffy-explain-ai.aiServiceProvider": "openai",

"diffy-explain-ai.openAiKey": "your-api-key-here"

}

Google Gemini

{

"diffy-explain-ai.aiServiceProvider": "gemini",

"diffy-explain-ai.geminiApiKey": "your-api-key-here"

}
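The three provider blocks above differ only in which key has to accompany `aiServiceProvider`. As a rough illustration of that rule (not Diffy's actual code), the sketch below checks a settings object for the key each provider needs. The `Settings` type, the `REQUIRED_KEYS` table, and `missingProviderKeys` are hypothetical names; only the setting names and the `vscode-lm` default come from this README.

```typescript
// Hypothetical helper, not part of Diffy: reports which required
// settings are missing for the chosen provider.
type Settings = Record<string, string | undefined>;

// Required key per provider, using the setting names from this README.
// "vscode-lm" (GitHub Copilot) needs no API key.
const REQUIRED_KEYS: Record<string, string[]> = {
  "vscode-lm": [],
  openai: ["diffy-explain-ai.openAiKey"],
  gemini: ["diffy-explain-ai.geminiApiKey"],
};

function missingProviderKeys(settings: Settings): string[] {
  // The configuration table lists "vscode-lm" as the default provider.
  const provider = settings["diffy-explain-ai.aiServiceProvider"] ?? "vscode-lm";
  const required = REQUIRED_KEYS[provider];
  if (required === undefined) {
    throw new Error(`Unknown provider: ${provider}`);
  }
  // A key counts as missing when it is absent or an empty string.
  return required.filter((key) => !settings[key]);
}

console.log(missingProviderKeys({ "diffy-explain-ai.aiServiceProvider": "openai" }));
```

An empty result means the chosen provider has everything it needs; any returned key is one to add to your settings.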

2. Generate Your First Commit Message

- Stage your changes: git add .

- Open VS Code's Source Control view

- Click the "✨ Generate Commit Message" button in the commit message box

- Review and commit!

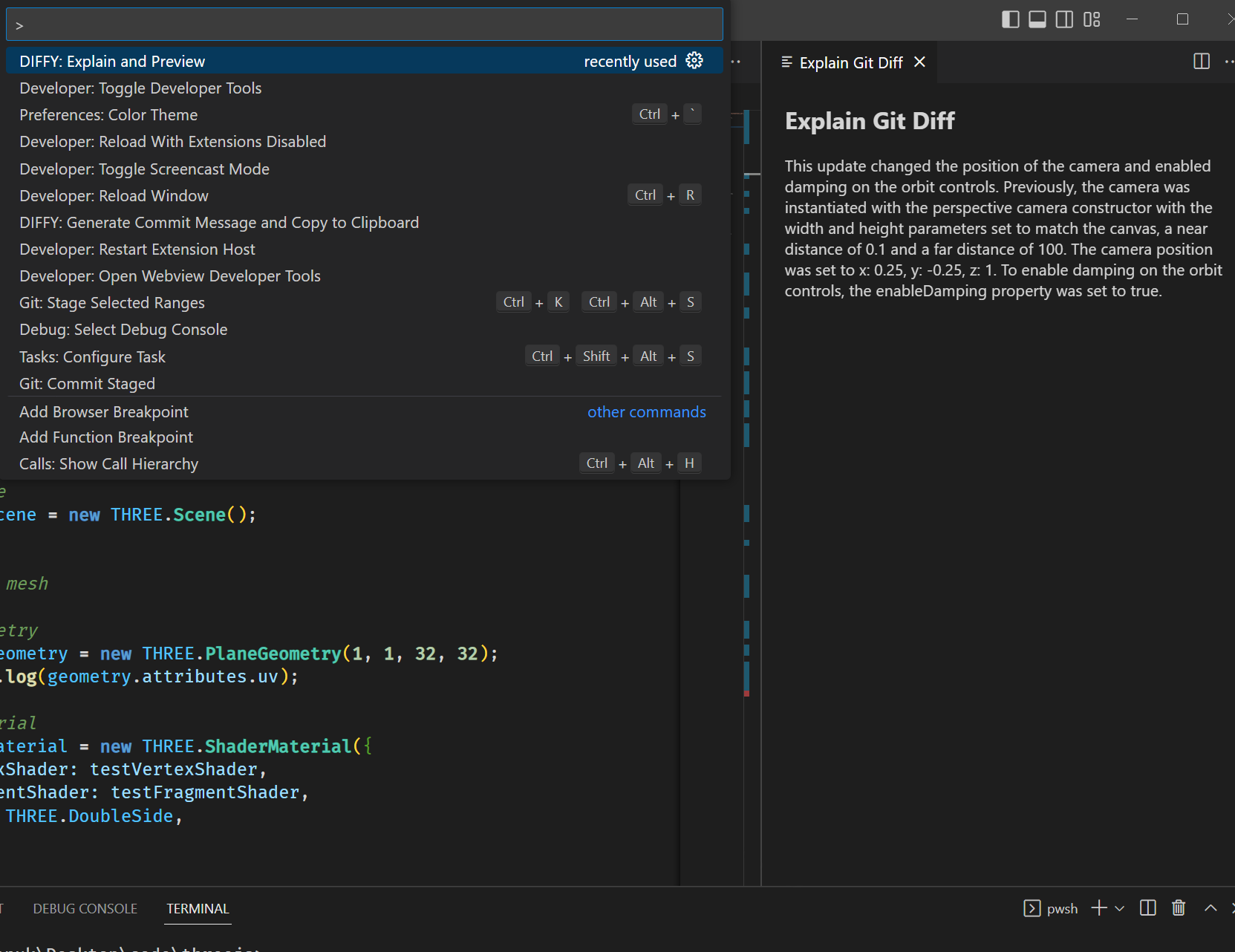

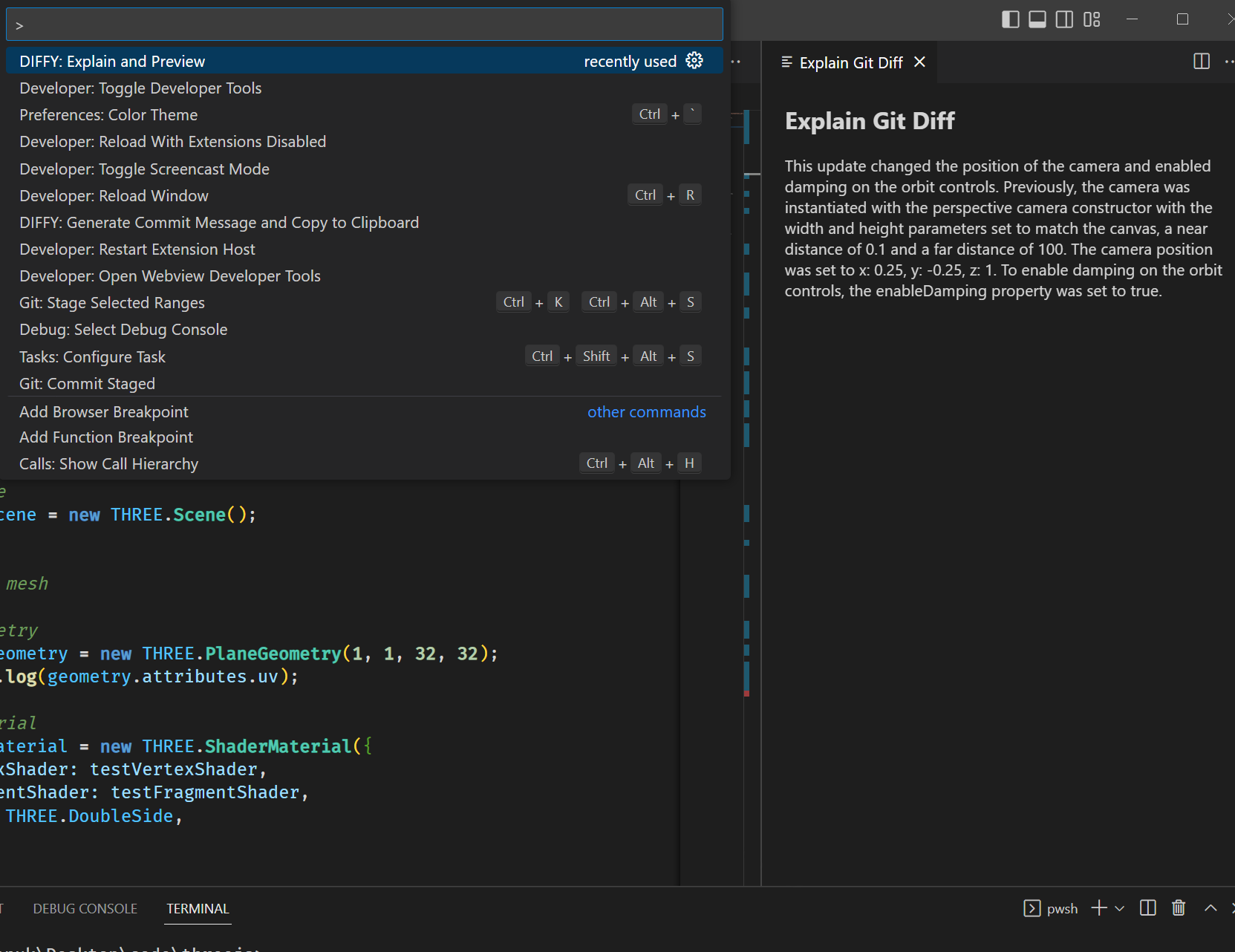

📸 Screenshots

Generate Commit Message

Explain and Preview Changes

📖 Usage

Commands

| Command | Description | Keyboard Shortcut |
| --- | --- | --- |
| diffy-explain-ai.generateCommitMessage | Generate commit message to input box | - |
| diffy-explain-ai.explainDiffClipboard | Explain changes and copy to clipboard | - |
| diffy-explain-ai.generateCommitMessageClipboard | Generate message and copy to clipboard | - |
| diffy-explain-ai.explainAndPreview | Explain and preview staged changes | - |

Right-click in the Source Control panel for quick access to Diffy commands.

⚙️ Configuration

AI Provider Settings

| Setting | Description | Default |
| --- | --- | --- |
| aiServiceProvider | Choose AI provider: openai, vscode-lm, gemini | vscode-lm |
| vscodeLmModel | GitHub Copilot model selection | auto |
| openAiKey | OpenAI API key | - |
| model | OpenAI model (gpt-4-turbo, gpt-4, etc.) | gpt-4-turbo |
| geminiApiKey | Google Gemini API key | - |
| geminiModel | Gemini model selection | gemini-2.0-flash-exp |

Commit Message Customization

| Setting | Description | Default |
| --- | --- | --- |
| commitMessageType | Format: conventional, gitmoji, custom | conventional |
| maxCommitMessageLength | Subject line character limit | 72 |
| includeCommitBody | Add detailed bullet points | false |
| additionalInstructions | Custom AI prompt instructions | - |
| customCommitPrompt | Full custom prompt template | - |
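To make the `conventional` format and the `maxCommitMessageLength` limit concrete, here is a minimal sketch of a subject-line check. It is an illustration only: `checkSubject` is a hypothetical function, the regular expression is a simplified reading of the Conventional Commits shape (`type(scope)!: description`), and nothing here is taken from Diffy's source.

```typescript
// Hypothetical checker, not Diffy's implementation.
function checkSubject(subject: string, maxLength = 72): string[] {
  const problems: string[] = [];
  // Simplified Conventional Commits shape:
  // lowercase type, optional (scope), optional "!", then ": description".
  const conventional = /^[a-z]+(\([^()]+\))?!?: \S.*$/;
  if (!conventional.test(subject)) {
    problems.push("not in Conventional Commits form");
  }
  if (subject.length > maxLength) {
    problems.push(`subject is ${subject.length} characters, limit is ${maxLength}`);
  }
  return problems;
}

console.log(checkSubject("feat(auth): add token refresh"));
console.log(checkSubject("Added stuff"));
```

A check like this can be handy when reviewing a generated message before committing, since the AI output is a suggestion and may not always respect the configured limit.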

File Processing

| Setting | Description | Default |
| --- | --- | --- |
| excludeFilesFromDiff | Glob patterns to exclude | package-lock.json, yarn.lock, etc. |
| respectGitignore | Honor .gitignore patterns | true |
| enableCodebaseContext | Include project context | false |
| codebaseIndexingStrategy | Indexing strategy: compact, structured, ast-based | compact |
| indexedFiles | Files to analyze for context | package.json, README.md, etc. |
| maxIndexedFileSize | Max file size for indexing (KB) | 50 |
| codebaseContextTokenBudget | Max tokens for context | 4000 |
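The `excludeFilesFromDiff` setting takes glob patterns. The sketch below shows one simplified way such patterns could be applied to a list of changed files. It is an assumption-laden illustration: `globToRegExp` and `isExcluded` are hypothetical helpers, the matcher supports only `*`, `**`, and `?`, and Diffy's real matching rules may differ (extensions commonly delegate this to a glob library).

```typescript
// Simplified glob matcher for illustration only; Diffy's own rules may differ.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob.charAt(i);
    if (ch === "*") {
      if (glob.charAt(i + 1) === "*") {
        pattern += ".*"; // "**" may cross directory separators
        i++;
      } else {
        pattern += "[^/]*"; // "*" stays within one path segment
      }
    } else if (ch === "?") {
      pattern += "[^/]"; // "?" matches a single non-separator character
    } else {
      pattern += ch.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
    }
  }
  return new RegExp(`^${pattern}$`);
}

// Patterns without "/" are compared against the file name only,
// patterns with "/" against the whole relative path.
function isExcluded(filePath: string, patterns: string[]): boolean {
  const baseName = filePath.slice(filePath.lastIndexOf("/") + 1);
  return patterns.some((p) =>
    globToRegExp(p).test(p.includes("/") ? filePath : baseName)
  );
}

const changed = ["src/app.ts", "package-lock.json", "assets/logo.png"];
const kept = changed.filter(
  (f) => !isExcluded(f, ["package-lock.json", "yarn.lock", "*.png"])
);
console.log(kept);
```

Here only `src/app.ts` survives the filter, which mirrors the intent of the setting: lock files and images add tokens to the prompt without helping the commit message.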

AI Behavior

| Setting | Description | Default |
| --- | --- | --- |
| temperature | AI creativity (0.0-2.0) | 0.2 |
| maxTokens | Maximum response length | 196 |
| proxyUrl | Custom proxy for OpenAI | - |

🎯 Advanced Features

Intelligent Codebase Indexing

Diffy offers 3 indexing strategies to balance context richness with token costs:

1. Compact (Default) - Token Optimized

{

"diffy-explain-ai.enableCodebaseContext": true,

"diffy-explain-ai.codebaseIndexingStrategy": "compact"

}

- Token Usage: ~800 tokens for 4 files

- Format: Single-line summary

- Best For: Most projects, cost optimization

- Output:

PROJECT: tech-stack • available-scripts

2. Structured

{

"diffy-explain-ai.codebaseIndexingStrategy": "structured"

}

- Token Usage: ~2000-3000 tokens

- Format: JSON with comprehensive metadata

- Best For: Complex changes requiring detailed context

- Features:

- File-level change tracking

- Modified functions and classes

- Import/export analysis

- Breaking change detection

- Automatic diff summarization

3. AST-based - Semantic Understanding

{

"diffy-explain-ai.codebaseIndexingStrategy": "ast-based"

}

- Token Usage: ~1500 tokens (enhanced format)

- Format: File paths with token counts

- Best For: Large refactors, architectural changes

- Status: Enhanced placeholder (full AST parsing is a future enhancement)

{

"diffy-explain-ai.indexedFiles": [

"package.json",

"README.md",

"tsconfig.json",

"Cargo.toml",

"go.mod",

"pom.xml",

"pyproject.toml",

"composer.json"

],

"diffy-explain-ai.codebaseContextTokenBudget": 4000

}
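The interplay between `indexedFiles` and `codebaseContextTokenBudget` can be pictured as a budget-limited selection. The following is a minimal sketch under stated assumptions, not Diffy's implementation: `IndexedFile`, `estimateTokens`, and `selectWithinBudget` are hypothetical, and the "about 4 characters per token" estimate is a common rule of thumb rather than the tokenizer Diffy uses.

```typescript
// Hypothetical sketch of budget-limited context selection.
interface IndexedFile {
  path: string;
  content: string;
}

// Rough heuristic (an assumption, not Diffy's tokenizer): ~4 characters per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Walk the files in listed order, keeping each one that still fits
// into the remaining budget and skipping those that do not.
function selectWithinBudget(files: IndexedFile[], budget: number): IndexedFile[] {
  const selected: IndexedFile[] = [];
  let used = 0;
  for (const file of files) {
    const cost = estimateTokens(file.content);
    if (used + cost > budget) continue; // too large for what is left
    selected.push(file);
    used += cost;
  }
  return selected;
}

const files: IndexedFile[] = [
  { path: "package.json", content: "x".repeat(8000) },  // ~2000 tokens
  { path: "README.md", content: "x".repeat(12000) },    // ~3000 tokens
  { path: "tsconfig.json", content: "x".repeat(4000) }, // ~1000 tokens
];
console.log(selectWithinBudget(files, 4000).map((f) => f.path));
```

With a budget of 4000, the oversized README is skipped while the two smaller files fit. This is why ordering `indexedFiles` by importance and keeping the list short tends to give the most useful context per token.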

Structured Diff Analysis (Structured Mode)

When using structured indexing, Diffy automatically analyzes your diff to extract:

- Modified Functions: Detects changed functions across JS/TS, Python, Rust, Go

- Modified Classes: Tracks class/interface/type changes

- Import Changes: Added/removed dependencies

- Export Changes: API surface modifications

- Breaking Changes: Automatic detection based on patterns

- Module Scope: File-level and function-level granularity

Example structured diff summary:

CHANGES SUMMARY:

📝 Modified 3 files (+45/-12 lines)

🔧 Functions: handleSubmit, validateInput

📦 Imports: +react-hook-form, -lodash

⚠️ Potential breaking change detected
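The first line of the summary above (file count and added/removed lines) can be derived directly from unified diff text. The sketch below shows one way to do that. It is illustrative only: `DiffStats`, `summarizeDiff`, and `formatSummary` are hypothetical names, the sample diff is made up, and Diffy's DiffAnalyzer may compute these figures differently.

```typescript
// Hypothetical sketch: count files and changed lines in `git diff` output.
interface DiffStats {
  files: number;
  added: number;
  removed: number;
}

function summarizeDiff(diff: string): DiffStats {
  const stats: DiffStats = { files: 0, added: 0, removed: 0 };
  let inHunk = false;
  for (const line of diff.split("\n")) {
    if (line.startsWith("diff --git ")) {
      stats.files++;
      inHunk = false; // the "---"/"+++" lines that follow are file headers
    } else if (line.startsWith("@@")) {
      inHunk = true; // hunk header: content lines follow
    } else if (inHunk && line.startsWith("+")) {
      stats.added++;
    } else if (inHunk && line.startsWith("-")) {
      stats.removed++;
    }
  }
  return stats;
}

function formatSummary(s: DiffStats): string {
  const noun = s.files === 1 ? "file" : "files";
  return `Modified ${s.files} ${noun} (+${s.added}/-${s.removed} lines)`;
}

// Made-up sample diff for demonstration.
const sample = [
  "diff --git a/src/form.ts b/src/form.ts",
  "--- a/src/form.ts",
  "+++ b/src/form.ts",
  "@@ -1,2 +1,3 @@",
  " import { useState } from 'react';",
  "-import _ from 'lodash';",
  "+import { useForm } from 'react-hook-form';",
  "+const LIMIT = 10;",
].join("\n");
console.log(formatSummary(summarizeDiff(sample)));
```

Tracking whether the parser is inside a hunk keeps the `---` and `+++` file headers out of the counts, while still counting a removed line whose own content happens to start with dashes.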

Basic Codebase Context

Basic configuration (now enhanced with strategies above):

{

"diffy-explain-ai.enableCodebaseContext": true,

"diffy-explain-ai.indexedFiles": [

"package.json",

"README.md"

]

}

Custom Commit Templates

Create fully custom commit message templates:

{

"diffy-explain-ai.commitMessageType": "custom",

"diffy-explain-ai.customCommitPrompt": "Generate a commit message for the following git diff.\n\nRequirements:\n- Maximum subject length: {maxLength} characters\n- Use imperative mood\n- Be concise and clear{bodyInstructions}\n\nReturn ONLY the commit message, no explanations."

}
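The template above contains two placeholders, `{maxLength}` and `{bodyInstructions}`. How Diffy expands them internally is not described in this README, so the following is only a sketch of the general idea. `fillTemplate` is a hypothetical function, and the value passed for `bodyInstructions` is an invented example.

```typescript
// Hypothetical template filler, shown only to illustrate placeholder expansion.
function fillTemplate(template: string, values: Record<string, string>): string {
  // Replace each {name} with its value; unknown placeholders are left untouched.
  return template.replace(/\{(\w+)\}/g, (match: string, name: string) =>
    Object.prototype.hasOwnProperty.call(values, name) ? String(values[name]) : match
  );
}

const prompt = fillTemplate(
  "Maximum subject length: {maxLength} characters\nBe concise and clear{bodyInstructions}",
  {
    maxLength: "72",
    bodyInstructions: "\n- Add a body with bullet points", // invented example value
  }
);
console.log(prompt);
```

Note that `{bodyInstructions}` sits directly after "Be concise and clear" with no space or newline in the template, so the substituted text presumably brings its own leading newline, or is empty when no body is requested.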

Team-Specific Instructions

Add team conventions or project-specific rules:

{

"diffy-explain-ai.additionalInstructions": "Follow team conventions:\n- Use JIRA ticket numbers in scope\n- Always include 'BREAKING CHANGE' footer when applicable\n- Mention affected microservices"

}

🔧 Development

Prerequisites

- Node.js 18+

- VS Code 1.105.0+

- TypeScript 4.9+

Setup

git clone https://github.com/Hi7cl4w/diffy-explain-ai.git

cd diffy-explain-ai

npm install

npm run compile

Testing

npm run test

Building

npm run package # Creates .vsix file

Debugging

- Open in VS Code

- Press F5 to launch the Extension Development Host

- Test in the new window

🤝 Contributing

We welcome contributions! Please see our Contributing Guide for details.

Development Workflow

- Fork the repository

- Create a feature branch: git checkout -b feature/amazing-feature

- Make your changes

- Run tests: npm run test

- Lint code: npm run lint

- Commit changes: git commit -m 'feat: add amazing feature'

- Push to branch: git push origin feature/amazing-feature

- Open a Pull Request

Code Quality

- TypeScript: Strict type checking

- Linting: Biome

- Testing: Mocha

- Formatting: Biome

📊 CI/CD

This project uses GitHub Actions for automated testing, linting, and releases.

🔍 Technical Stack

Architecture

- Language: TypeScript (strict mode)

- Build: Webpack 5 with esbuild loader

- Linting: Biome (replaces ESLint + Prettier)

- Testing: Mocha + VS Code Test Runner

- Bundling: Single extension.js bundle with vendor chunks

Key Services

CodebaseIndexService: Smart file indexing with 3 strategies

DiffAnalyzer: Structured git diff analysis

OpenAiService, GeminiService, VsCodeLlmService: AI provider integrations

GitService: Git operations and diff management

WorkspaceService: Configuration and workspace management

Logger: Structured logging with VS Code LogOutputChannel

- File content caching with token counting

- Smart file filtering and prioritization

- Lazy service initialization

- Webpack code splitting for faster activation

🙏 Support

Getting Help

Common Issues

"No GitHub Copilot models available"

- Ensure GitHub Copilot is installed and you're signed in

- Check your Copilot subscription status

"OpenAI API key not working"

- Verify your API key is correct

- Check your OpenAI account has credits

- Ensure the key has the necessary permissions

"Extension not activating"

- Check VS Code version (^1.105.0 required)

- Try reloading VS Code: Ctrl+Shift+P → "Developer: Reload Window"

"High token usage with indexing"

- Switch to the compact indexing strategy for lower costs

- Reduce codebaseContextTokenBudget (default: 4000)

- Limit indexedFiles to essential configuration files

"Missing context in commit messages"

- Enable the enableCodebaseContext setting

- Try structured indexing for rich metadata

- Add relevant config files to indexedFiles

📄 License

MIT License - see the LICENSE file for details.

🙌 Acknowledgments

Made with ❤️ by Hi7cl4w