GitMsgAI

Install from VS Code Marketplace →

AI-powered commit message generator for VS Code. Get perfect commits in seconds using any of 5 AI providers, 400+ models, or completely free local AI.

🚀 Why GitMsgAI?

Better than writing commits yourself:

- ✅ Consistent format across your team

- ✅ Never forget what you changed

- ✅ Follows best practices automatically

- ✅ Saves 5+ minutes per day

Better than other AI commit tools:

- ✅ 5 providers vs just 1 (most tools only support OpenAI)

- ✅ Free local models with Ollama/LM Studio (zero API costs)

- ✅ Smart caching saves 80%+ on API calls

- ✅ Enterprise security (rate limiting, sensitive file exclusion)

- ✅ 400+ models to choose from

Better pricing:

- 🆓 $0 - Use Ollama or LM Studio (completely free forever)

- 💵 $0.05/1M input tokens - GPT-5 Nano (latest GPT model)

- 🎁 Free tier - OpenRouter Gemini (no credit card needed)

⚡ Quick Start (30 seconds)

Option 1: Free Tier (No Credit Card Required)

- Install extension

- Click the 🤖 robot icon in your Git panel

- The extension prompts for an API key → get a free key from OpenRouter

- Paste key → Done! Uses free Gemini model by default

Option 2: Completely Free Forever (Local AI)

- Install Ollama (takes 2 minutes)

- Run `ollama pull qwen2.5-coder`

- Install extension

- Click 🤖 robot icon → Select "Local" provider → Done!

No API key, no credit card, no tracking. Just works.

✨ Features

🎯 Core Features

- AI-Powered Commit Messages: Uses AI to analyze your changes and generate meaningful commit messages

- Multi-Provider Support: Choose from 5 AI providers (OpenRouter, OpenAI, Google, Claude, Local)

- Interactive Model Picker: Browse and select from 400+ AI models with pricing and context info

- Conventional Commits Support: Automatically follows conventional commit format with configurable types and scopes

- Smart Caching: Reuses recent commit messages for identical changes to save API costs

- Review Mode: Preview and approve commit messages before applying

- Customizable: Configure AI model, prompt template, and conventional commit rules

🔒 Security Features

- SecretStorage API for secure API key management (OS-level encryption)

- Rate limiting to prevent API abuse

- Sensitive file exclusion patterns

- Input validation and sanitization

- User consent warnings for external API usage

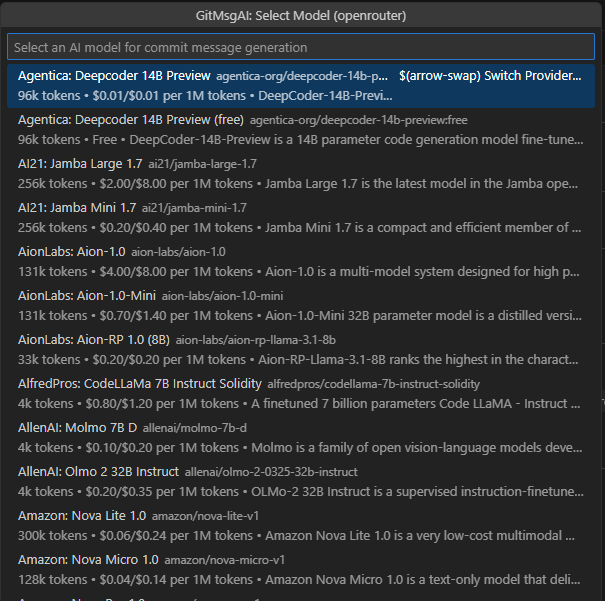

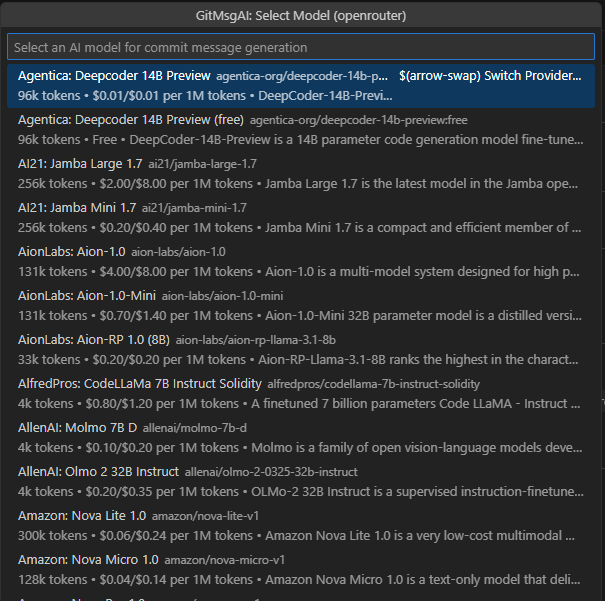

Model Selection

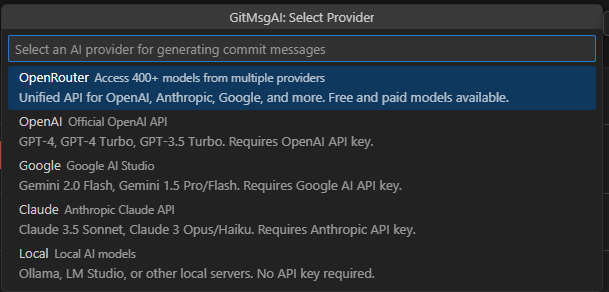

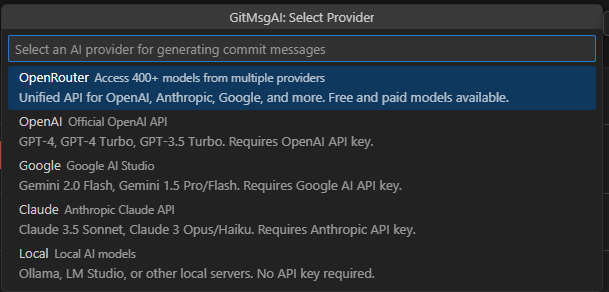

Provider Selection

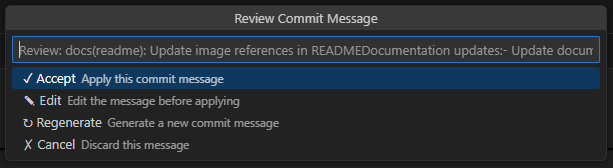

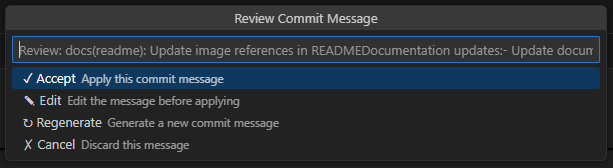

Review Mode

Multi-Provider Support

GitMsgAI supports 5 different AI providers, giving you the flexibility to choose the best option for your workflow:

Supported Providers

| Provider | API Key Required | Model Selection | Best For |
|---|---|---|---|
| OpenRouter | Yes | 400+ models from multiple providers | Maximum flexibility, one API for many models |
| OpenAI | Yes | GPT-5 Nano (latest, most efficient) | Cutting-edge performance |
| Google | Yes | Gemini 2.5 Flash Lite Preview (newest) | Latest model, best pricing |
| Claude | Yes | Sonnet 4.5 (current flagship) | Top-tier coding and reasoning |
| Local | No | GPT-OSS-20B, Qwen 2.5 Coder (Free) | Privacy, no API costs, offline use |

Quick Setup by Provider

OpenRouter (Default)

- Get your API key from OpenRouter

- Run `GitMsgAI: Set API Key`

- Browse 400+ models with `GitMsgAI: Select Model`

- Best for: Access to many models through one API

OpenAI

- Get your API key from OpenAI Platform

- Run `GitMsgAI: Select Provider` → Choose "OpenAI"

- Run `GitMsgAI: Set API Key`

- Best for: Enterprise use, consistent quality

Google (Gemini)

- Get your API key from Google AI Studio

- Run `GitMsgAI: Select Provider` → Choose "Google"

- Run `GitMsgAI: Set API Key`

- Best for: Large diffs, free tier available

Claude (Anthropic)

- Get your API key from Anthropic Console

- Run `GitMsgAI: Select Provider` → Choose "Claude"

- Run `GitMsgAI: Set API Key`

- Best for: Natural commit messages, code understanding

Local Models (Ollama/LM Studio)

- Install Ollama or LM Studio

- Download a model (recommended: `qwen2.5-coder:latest`, `gpt-oss-20b`, `codellama`)

- Run `GitMsgAI: Select Provider` → Choose "Local"

- Set your base URL (default: `http://localhost:11434/v1` for Ollama; LM Studio's local server listens on `http://localhost:1234/v1` by default)

- Best for: Privacy, no API costs, offline use
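Ollama and LM Studio both expose an OpenAI-compatible `/chat/completions` endpoint under their `/v1` base URL. The minimal sketch below shows what a Local provider request could look like under that assumption. `buildLocalChatRequest` and `generateWithLocalModel` are hypothetical names, not GitMsgAI's actual code. The sketch also checks the response structure before using it.

```typescript
// Hypothetical request shape for an OpenAI-compatible local server
interface LocalChatRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

function buildLocalChatRequest(baseUrl: string, model: string, prompt: string): LocalChatRequest {
  // Tolerate a trailing slash in the configured base URL
  const url = `${baseUrl.replace(/\/+$/, "")}/chat/completions`;
  const body = JSON.stringify({
    model,
    messages: [{ role: "user", content: prompt }],
    stream: false,
  });
  return { url, init: { method: "POST", headers: { "Content-Type": "application/json" }, body } };
}

async function generateWithLocalModel(baseUrl: string, model: string, prompt: string): Promise<string> {
  const { url, init } = buildLocalChatRequest(baseUrl, model, prompt);
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Local server returned HTTP ${response.status}`);
  }
  // Validate the response structure before trusting it
  const data = (await response.json()) as { choices?: { message?: { content?: unknown } }[] } | null;
  const content = data?.choices?.[0]?.message?.content;
  if (typeof content !== "string") {
    throw new Error("Unexpected response shape from local server");
  }
  return content.trim();
}
```

For example, `generateWithLocalModel("http://localhost:11434/v1", "qwen2.5-coder:latest", prompt)` would target a default Ollama install. No `Authorization` header is sent, matching the Local provider's no-API-key setup.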

Switching Providers

You can easily switch between providers at any time:

- Open Command Palette (Ctrl+Shift+P / Cmd+Shift+P)

- Run `GitMsgAI: Select Provider`

- Choose your desired provider

- Set the API key if needed (not required for Local)

- Select a model for the new provider

Each provider maintains its own API key and model selection, so you can switch back and forth without reconfiguring.

For detailed setup instructions and troubleshooting, see docs/PROVIDERS.md.

Requirements

You need an API key from one of the supported providers (OpenRouter, OpenAI, Google, Claude) or a local AI setup (Ollama/LM Studio). See the Multi-Provider Support section for details on each provider.

Setup

First-Time Setup

1. Install the extension from the VS Code Marketplace

2. Select your AI provider (optional; defaults to OpenRouter):
   - Open Command Palette (Ctrl+Shift+P / Cmd+Shift+P)
   - Run `GitMsgAI: Select Provider`
   - Choose from: OpenRouter, OpenAI, Google, Claude, or Local
   - See Multi-Provider Support for provider details

3. Set up your API key:
   - Open Command Palette (Ctrl+Shift+P / Cmd+Shift+P)
   - Run `GitMsgAI: Set API Key`
   - Enter your API key for the selected provider
   - Your key is stored securely using VS Code's SecretStorage API
   - Note: Not required for Local provider (Ollama/LM Studio)

4. Select your AI model:
   - Open Command Palette (Ctrl+Shift+P / Cmd+Shift+P)
   - Run `GitMsgAI: Select Model`
   - Browse available models for your selected provider
   - Select your preferred model

5. Configure your preferences (optional):
   - Customize the prompt template
   - Configure conventional commit types and scopes

Selecting a Model

The extension includes an interactive model picker that shows available models for your selected provider:

How to use:

- Command Palette → `GitMsgAI: Select Model`
- Browse the list with search/filter capabilities
- View model details (varies by provider):
  - Context length (e.g., "2M tokens" for large context windows)
  - Pricing (cost per million tokens, when available)
  - Description (model capabilities and characteristics)
- Select a model to automatically update your configuration

Provider-specific notes:

- OpenRouter: Browse 400+ models from an auto-updating list (refreshed every 24 hours by default)

- OpenAI: See GPT-4, GPT-3.5 Turbo, and other OpenAI models

- Google: Choose from Gemini Pro, Flash, and other Google models

- Claude: Select from Claude 3.5 Sonnet, Opus, Haiku, and other versions

- Local: Pick from common Ollama/LM Studio models or enter a custom model name
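OpenRouter's public models endpoint (`GET https://openrouter.ai/api/v1/models`) reports each model's `context_length` and gives prices as per-token decimal strings in `pricing.prompt` and `pricing.completion`. The sketch below converts those values into the per-1M-token figures a picker would show. The label format and the function names are hypothetical. The field names should be checked against OpenRouter's API documentation before you rely on them.

```typescript
// Subset of the fields OpenRouter returns per model (assumed shape)
interface OpenRouterModel {
  id: string;
  context_length: number;
  pricing: { prompt: string; completion: string }; // USD per token, as strings
}

async function fetchOpenRouterModels(): Promise<OpenRouterModel[]> {
  const response = await fetch("https://openrouter.ai/api/v1/models");
  if (!response.ok) throw new Error(`HTTP ${response.status}`);
  const body = (await response.json()) as { data: OpenRouterModel[] };
  return body.data;
}

// Convert a per-token price string into USD per 1M tokens
function perMillion(pricePerToken: string): number {
  return Number(pricePerToken) * 1_000_000;
}

function formatContext(tokens: number): string {
  return tokens >= 1_000_000
    ? `${Number((tokens / 1_000_000).toFixed(1))}M`
    : `${Math.round(tokens / 1000)}k`;
}

function formatModelLabel(model: OpenRouterModel): string {
  const input = perMillion(model.pricing.prompt);
  const output = perMillion(model.pricing.completion);
  const price = input === 0 && output === 0
    ? "free"
    : `$${input.toFixed(2)}/$${output.toFixed(2)} per 1M`;
  return `${model.id} · ${formatContext(model.context_length)} ctx · ${price}`;
}
```

Re-fetching the list on the `gitmsgai.modelsUpdateInterval` schedule would keep these labels current.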

Recommended Models by Provider (Cheapest First):

| Provider | Model | Pricing (Input/Output per 1M tokens) | Context | Best For |
|---|---|---|---|---|
| Local (LM Studio) | `openai/gpt-oss-20b` | Free | 32k tokens | Privacy, offline, no API costs |
| Local (Ollama) | `qwen2.5-coder:latest` | Free | 32k tokens | Code-focused, completely free |
| OpenRouter | `google/gemini-2.0-flash-exp:free` | Free | 1M tokens | Free tier, large context |
| OpenAI | `gpt-5-nano` | $0.05 / $0.40 | 128k tokens | Latest GPT-5, ultra-efficient |
| Google | `gemini-2.5-flash-lite-preview` | $0.10 / $0.40 | 2M tokens | Latest Gemini, advanced reasoning |
| OpenAI | `gpt-5-mini` | $0.25 / $2.00 | 128k tokens | Balanced GPT-5 performance |
| Google | `gemini-2.5-flash-preview` | $0.30 / $1.20 | 2M tokens | Full Gemini 2.5 with thinking |
| Claude | `claude-3-5-haiku-20241022` | $0.80 / $4.00 | 200k tokens | Budget-friendly Claude |
| Claude | `claude-sonnet-4-5-20250929` | $3.00 / $15.00 | 200k tokens | Latest flagship, agentic coding |
| Claude | `claude-opus-4-1-20250805` | $15.00 / $75.00 | 200k tokens | Maximum intelligence |

💡 Cost-saving tips:

- Best value: Use Local models (Ollama/LM Studio) with GPT-OSS-20B for completely free commit messages

- Cheapest API: OpenAI GPT-5 Nano at $0.05/$0.40 per 1M tokens (latest generation)

- Free tier: OpenRouter's `google/gemini-2.0-flash-exp:free` or Google AI Studio's free quota

- Latest tech: GPT-5 Nano ($0.05/$0.40) and Gemini 2.5 Flash Lite ($0.10/$0.40) offer cutting-edge performance at low cost

- For quality: Claude Sonnet 4.5 ($3/$15) is Anthropic's current flagship, built for coding and agentic workflows

- OpenRouter advantage: Access 400+ models with live pricing through one API - prices shown are what you pay (no markup)
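To convert the per-1M-token prices into a per-commit cost, multiply each rate by its token count and divide by 1,000,000. Take a hypothetical commit with 2,000 input tokens (diff plus prompt) and a 50-token message:

- GPT-5 Nano: 2,000 × $0.05/1M + 50 × $0.40/1M = $0.00012
- Claude Sonnet 4.5: 2,000 × $3/1M + 50 × $15/1M = $0.00675

The helper below does the same arithmetic. Its name and the token counts are illustrative assumptions.

```typescript
interface Pricing {
  inputPerMillion: number;  // USD per 1M input tokens
  outputPerMillion: number; // USD per 1M output tokens
}

function estimateCostUSD(inputTokens: number, outputTokens: number, pricing: Pricing): number {
  return (inputTokens * pricing.inputPerMillion + outputTokens * pricing.outputPerMillion) / 1_000_000;
}

// Prices from the table above; token counts are assumptions for a typical diff
const examples: { name: string; pricing: Pricing }[] = [
  { name: "gpt-5-nano", pricing: { inputPerMillion: 0.05, outputPerMillion: 0.4 } },
  { name: "claude-sonnet-4-5", pricing: { inputPerMillion: 3, outputPerMillion: 15 } },
];

for (const { name, pricing } of examples) {
  const perCommit = estimateCostUSD(2_000, 50, pricing);
  console.log(`${name}: $${(perCommit * 1_000).toFixed(2)} per 1,000 commits`);
}
```

With these assumptions, the loop prints $0.12 per 1,000 commits for GPT-5 Nano and $6.75 for Claude Sonnet 4.5.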

Usage

Basic Usage

- Make changes to your code

- Stage your changes in Git (using Source Control panel)

- Click the robot head icon (🤖) in the Source Control message input box or title bar

- The AI will analyze your changes and generate a commit message

- Edit the message if needed and commit as usual

Using Cache

When you generate a commit message for the same set of changes:

- The extension checks if a cached message exists

- If found, you'll see: "Found cached suggestion from X minutes ago"

- Choose:
  - Use Cached - Apply the previously generated message (no API call)
  - Generate New - Create a fresh message (uses API)
  - Dismiss - Cancel the operation

To clear the cache:

- Command Palette → `GitMsgAI: Clear Cache`
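The cache lookup above can be modeled as a small least-recently-used map. It is keyed by the staged diff and capped at `gitmsgai.cacheSize` entries. Below is a minimal sketch under that assumption. `CommitMessageCache` and `minutesAgo` are hypothetical names. A real implementation might key on a hash of the diff (for example SHA-256) rather than the full text.

```typescript
interface CachedMessage {
  message: string;
  createdAt: number; // epoch milliseconds
}

class CommitMessageCache {
  private readonly entries = new Map<string, CachedMessage>();
  private readonly maxSize: number;

  constructor(maxSize = 10) {
    this.maxSize = maxSize;
  }

  get(diff: string): CachedMessage | undefined {
    const entry = this.entries.get(diff);
    if (entry !== undefined) {
      // Re-insert so this diff becomes the most recently used
      this.entries.delete(diff);
      this.entries.set(diff, entry);
    }
    return entry;
  }

  set(diff: string, message: string, now: number = Date.now()): void {
    this.entries.delete(diff);
    this.entries.set(diff, { message, createdAt: now });
    // Map preserves insertion order, so the first key is the least recently used
    while (this.entries.size > this.maxSize) {
      const oldest = this.entries.keys().next().value;
      if (oldest === undefined) break;
      this.entries.delete(oldest);
    }
  }

  clear(): void {
    this.entries.clear();
  }
}

// Drives the "Found cached suggestion from X minutes ago" prompt
function minutesAgo(entry: CachedMessage, now: number = Date.now()): number {
  return Math.floor((now - entry.createdAt) / 60_000);
}
```

Because the key is the exact diff, any change to the staged content misses the cache. This is why caching only helps for identical diffs.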

Review Mode

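Review mode lets you preview messages before applying them. It is on by default (see `gitmsgai.reviewBeforeApply` under Feature Settings); to enable it explicitly: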

```json
{
  "gitmsgai.reviewBeforeApply": true
}
```

With review mode enabled:

- Generate a commit message

- Review the suggested message in a quick pick dialog

- Choose to accept, edit, or regenerate

Using Conventional Commits

The extension automatically generates conventional commit messages. Example output:

```text
feat: add user authentication
fix(api): handle null response from endpoint
docs: update installation instructions
refactor(utils): simplify date formatting logic
```

Configure conventional commits:

```json
{
  "gitmsgai.conventionalCommits.enabled": true,
  "gitmsgai.conventionalCommits.types": ["feat", "fix", "docs", "style", "refactor", "test", "chore"],
  "gitmsgai.conventionalCommits.enableScopeDetection": true
}
```
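Conventional commit headers take the form `type(scope): subject`, with an optional `!` before the colon for breaking changes. The sketch below checks a generated message against settings like the ones above. `validateConventionalCommit` is a hypothetical function, not the extension's documented behavior. So is its `maxHeaderLength` rule, which borrows the default prompt's 50-character guideline.

```typescript
interface ConventionalRules {
  types: string[];
  scopes: string[];        // empty array = any scope allowed
  requireScope: boolean;
  maxHeaderLength: number; // hypothetical rule based on the default prompt
}

// type, optional (scope), optional "!" for breaking changes, then ": subject"
const HEADER_PATTERN = /^([a-z]+)(?:\(([^()]+)\))?(!)?: (\S.*)$/;

function validateConventionalCommit(message: string, rules: ConventionalRules): string[] {
  const header = message.split("\n")[0].trim();
  const match = HEADER_PATTERN.exec(header);
  if (match === null) {
    return [`Header "${header}" does not match "type(scope): subject"`];
  }
  const type = match[1];
  const scope: string | undefined = match[2]; // undefined when no "(scope)"
  const problems: string[] = [];
  if (!rules.types.includes(type)) problems.push(`Unknown type "${type}"`);
  if (scope === undefined && rules.requireScope) problems.push("A scope is required");
  if (scope !== undefined && rules.scopes.length > 0 && !rules.scopes.includes(scope)) {
    problems.push(`Scope "${scope}" is not in the allowed list`);
  }
  if (header.length > rules.maxHeaderLength) {
    problems.push(`Header is ${header.length} characters (limit ${rules.maxHeaderLength})`);
  }
  return problems;
}
```

An empty result means the header passes every rule. Otherwise each string describes one violation, which could be shown to the user in review mode.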

Extension Settings

Core Settings

| Setting | Type | Default | Description |
|---|---|---|---|
| `gitmsgai.provider` | string | `openrouter` | AI provider (`openrouter`, `openai`, `google`, `claude`, `local`) |
| `gitmsgai.openrouter.model` | string | `google/gemini-2.0-flash-exp:free` | OpenRouter model (free tier recommended) |
| `gitmsgai.openai.model` | string | `gpt-5-nano` | OpenAI model |
| `gitmsgai.google.model` | string | `gemini-2.5-flash-lite-preview` | Google Gemini model |
| `gitmsgai.claude.model` | string | `claude-sonnet-4-5-20250929` | Claude model |
| `gitmsgai.local.model` | string | `openai/gpt-oss-20b` | Local model (Ollama/LM Studio) |
| `gitmsgai.prompt` | string | (see below) | Custom prompt template (use `{changes}` placeholder) |
| `gitmsgai.timeout` | number | `30` | API request timeout in seconds |

Security & Privacy Settings

| Setting | Type | Default | Description |
|---|---|---|---|
| `gitmsgai.rateLimitPerMinute` | number | `10` | Maximum API requests per minute |
| `gitmsgai.showConsentWarning` | boolean | `true` | Show warning about external API usage |
| `gitmsgai.excludePatterns` | array | `[".env*", "*.key", ...]` | Glob patterns for sensitive files to exclude from diffs |
| `gitmsgai.warnOnSensitiveFiles` | boolean | `true` | Show warning when sensitive files are excluded |

Feature Settings

| Setting | Type | Default | Description |
|---|---|---|---|
| `gitmsgai.reviewBeforeApply` | boolean | `true` | Review messages before applying |
| `gitmsgai.enableCache` | boolean | `true` | Enable commit message caching |
| `gitmsgai.cacheSize` | number | `10` | Maximum cached messages |
| `gitmsgai.autoUpdateModels` | boolean | `true` | Automatically update models list from OpenRouter |
| `gitmsgai.modelsUpdateInterval` | number | `24` | How often to update models list (in hours) |

Conventional Commits Settings

| Setting | Type | Default | Description |
|---|---|---|---|
| `gitmsgai.conventionalCommits.enabled` | boolean | `true` | Enable conventional commits support |
| `gitmsgai.conventionalCommits.types` | array | `["feat", "fix", "docs", ...]` | Allowed commit types |
| `gitmsgai.conventionalCommits.scopes` | array | `[]` | Allowed scopes (empty = any) |
| `gitmsgai.conventionalCommits.enableScopeDetection` | boolean | `true` | Auto-detect scope from file paths |
| `gitmsgai.conventionalCommits.requireScope` | boolean | `false` | Require scope in messages |
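`enableScopeDetection` derives a scope from the staged file paths. The exact heuristic is not spelled out in this README. The sketch below shows one plausible rule, offered as an assumption:

- Use the first directory under a `src/`, `lib/` or `packages/` container.
- Only do so when every staged file shares that directory.
- Respect `gitmsgai.conventionalCommits.scopes` when that list is non-empty.

`detectScope` is a hypothetical name.

```typescript
// Container directories skipped when picking a scope (heuristic assumption)
const CONTAINER_DIRS = ["src", "lib", "packages"];

function detectScope(stagedPaths: string[], allowedScopes: string[] = []): string | undefined {
  const candidates = stagedPaths.map((path) => {
    const parts = path.replace(/\\/g, "/").split("/").filter((part) => part.length > 0);
    const start = CONTAINER_DIRS.includes(parts[0]) ? 1 : 0;
    // A scope needs a directory between the container and the file name
    return parts.length - start >= 2 ? parts[start] : undefined;
  });
  const first = candidates[0];
  // No scope unless every staged file lives under the same directory
  if (first === undefined || candidates.some((candidate) => candidate !== first)) return undefined;
  // Honor an explicit allow-list when one is configured
  if (allowedScopes.length > 0 && !allowedScopes.includes(first)) return undefined;
  return first;
}
```

Under this rule, staging only `src/utils/date.ts` yields the scope `utils`, as in the `refactor(utils): ...` example. A change spanning `src/api` and `src/ui` gets no scope.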

Default Prompt Template

The default prompt generates conventional commit messages:

```text
Given these staged changes:
{changes}

Generate a commit message that follows these rules:
1. Start with a type (feat/fix/docs)
2. Keep it under 50 characters
3. Use imperative mood
```

You can customize this in settings to match your team's commit message style.
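Filling the template is a literal substitution of `{changes}`. The sketch below uses `split`/`join` rather than `String.prototype.replace`. With a string pattern, `replace` substitutes only the first match and treats `$&` or `$'` in the replacement text as special patterns, and a diff can contain those sequences. `buildPrompt` is a hypothetical name. `DEFAULT_PROMPT` approximates the default template shown above.

```typescript
// Approximates the default template shown above
const DEFAULT_PROMPT =
  "Given these staged changes:\n{changes}\n\n" +
  "Generate a commit message that follows these rules:\n" +
  "1. Start with a type (feat/fix/docs)\n" +
  "2. Keep it under 50 characters\n" +
  "3. Use imperative mood";

function buildPrompt(template: string, changes: string): string {
  // Literal, global substitution: safe for diffs containing "$&" or "$'"
  return template.split("{changes}").join(changes);
}
```

A custom template, such as the Jira example under Advanced Configuration, goes through the same function unchanged.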

Security Features

Secure API Key Storage

Your API keys (one per provider) are stored securely using VS Code's SecretStorage API:

- Encrypted Storage: Keys are encrypted at the OS level

- No Plain Text: Keys never appear in settings.json

- Cross-Platform: Works on Windows (Credential Manager), macOS (Keychain), Linux (Secret Service)
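Storing one key per provider only requires a distinct secret name for each provider. The sketch below uses a hypothetical naming scheme (`gitmsgai.apiKey.<provider>`). It is typed against a minimal interface that mirrors the `get`/`store` methods of VS Code's `SecretStorage`, so `context.secrets` can be passed in. All function names here are illustrative.

```typescript
type Provider = "openrouter" | "openai" | "google" | "claude" | "local";

// Minimal subset of vscode.SecretStorage used by this sketch
interface SecretStore {
  get(key: string): PromiseLike<string | undefined>;
  store(key: string, value: string): PromiseLike<void>;
}

// Hypothetical naming scheme: one secret per provider
function secretKeyFor(provider: Provider): string {
  return `gitmsgai.apiKey.${provider}`;
}

function requiresApiKey(provider: Provider): boolean {
  return provider !== "local"; // Ollama/LM Studio run without a key
}

async function saveApiKey(secrets: SecretStore, provider: Provider, apiKey: string): Promise<void> {
  if (!requiresApiKey(provider)) return;
  await secrets.store(secretKeyFor(provider), apiKey.trim());
}

async function loadApiKey(secrets: SecretStore, provider: Provider): Promise<string | undefined> {
  if (!requiresApiKey(provider)) return undefined;
  return await secrets.get(secretKeyFor(provider));
}
```

Separate secret names let you switch providers without overwriting the other providers' keys.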

Rate Limiting

Protects against accidental API abuse:

- Default: 10 requests per minute

- Configurable via `gitmsgai.rateLimitPerMinute`

- Shows a clear error with a countdown timer when the limit is reached
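A per-minute limit with a countdown can be implemented as a sliding window over recent request timestamps. The sketch below assumes that design, and the extension's actual algorithm may differ. `SlidingWindowRateLimiter` is a hypothetical name. `retryAfterSeconds` is the value a countdown message would display.

```typescript
interface RateLimitResult {
  allowed: boolean;
  retryAfterSeconds: number; // 0 when allowed
}

class SlidingWindowRateLimiter {
  private readonly timestamps: number[] = [];
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limitPerMinute = 10, windowMs = 60_000) {
    this.limit = limitPerMinute;
    this.windowMs = windowMs;
  }

  tryAcquire(now: number = Date.now()): RateLimitResult {
    // Forget requests that have left the window
    while (this.timestamps.length > 0 && this.timestamps[0] <= now - this.windowMs) {
      this.timestamps.shift();
    }
    if (this.timestamps.length < this.limit) {
      this.timestamps.push(now);
      return { allowed: true, retryAfterSeconds: 0 };
    }
    // The oldest request still in the window determines when a slot frees up
    const retryAfterMs = this.timestamps[0] + this.windowMs - now;
    return { allowed: false, retryAfterSeconds: Math.ceil(retryAfterMs / 1000) };
  }
}
```

With the default of 10 per minute, an 11th request one second after the first is told to wait 59 seconds.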

Input Validation & Sanitization

All data is validated before processing:

- API responses are validated for correct structure

- Commit messages are sanitized to remove dangerous characters

- Error messages are scrubbed to prevent leaking sensitive data
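The sanitization and scrubbing steps can be sketched as two small string functions. This README does not specify what counts as a "dangerous character", so the sketch assumes it means control characters. It also unwraps a Markdown code fence, which models sometimes add around their answer. `sanitizeCommitMessage` and `scrubSecrets` are hypothetical names.

```typescript
function sanitizeCommitMessage(raw: string): string {
  // Normalize line endings, then trim surrounding whitespace
  let text = raw.replace(/\r\n?/g, "\n").trim();
  // Unwrap a Markdown code fence if the model wrapped its answer in one
  const fenced = /^```[\w-]*\n([\s\S]*?)\n```$/.exec(text);
  if (fenced) text = fenced[1].trim();
  // Drop control characters except tab (\u0009) and newline (\u000A)
  return text.replace(/[\u0000-\u0008\u000B-\u001F\u007F]/g, "");
}

function scrubSecrets(message: string, secrets: string[]): string {
  let out = message;
  // Redact any known secret (e.g. the configured API key) verbatim
  for (const secret of secrets) {
    if (secret.length > 0) out = out.split(secret).join("[REDACTED]");
  }
  // Also redact bearer tokens echoed back in error text
  return out.replace(/Bearer\s+[^\s"']+/g, "Bearer [REDACTED]");
}
```

Passing the active provider's key into `scrubSecrets` before displaying any error keeps the key out of notifications and logs.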

User Consent

On first use, the extension shows a consent dialog explaining:

- What data is sent to your selected AI provider (git diff)

- How to disable the warning

- Privacy implications

Privacy & Security

What Data is Sent to AI Providers

When you generate a commit message, the extension sends data to your selected AI provider:

- Git diff of your staged changes (code differences only)

- Your prompt template with the diff substituted

- No personal information (name, email, etc.)

- No sensitive files: files matching `gitmsgai.excludePatterns` (e.g., `.env*`, `*.key`) are excluded by default

Note for Local provider: When using Ollama or LM Studio, all data stays on your machine. Nothing is sent to external servers.

Example of what gets sent:

```text
Given these staged changes:
diff --git a/src/app.ts b/src/app.ts
index 123..456
--- a/src/app.ts
+++ b/src/app.ts
@@ -10 +10 @@
-console.log('old');
+console.log('new');

Generate a commit message that follows these rules:
1. Start with a type (feat/fix/docs)
2. Keep it under 50 characters
3. Use imperative mood
```

What Data is Stored Locally

- API Keys: Encrypted in OS-level secure storage (SecretStorage) - one per provider

- Provider Settings: Selected provider, models, and base URLs in VS Code settings

- Cache: Recent commit messages stored in VS Code workspace state

- Settings: User preferences in VS Code settings

- Consent Flag: Whether you've acknowledged the data privacy warning

Sensitive File Protection

By default, certain files are excluded from being sent to the AI:

- `.env*` files (environment variables)
- `*.key`, `*.pem`, `*.p12`, `*.pfx` (certificate files)
- `credentials.json` (credential files)
- `secrets.*`, `*.secret` (secret files)
- Files in `**/secrets/**` directories

If sensitive files are detected in your staged changes, you'll see a warning.
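The default exclusion patterns are globs. The sketch below shows one way to match staged paths against them, assuming gitignore-like semantics:

- Patterns without a `/` are tested against the file name only.
- `*` stays within one path segment.
- `**/` spans any number of directories.

`globToRegExp` and `isSensitive` are hypothetical helpers, not the extension's API.

```typescript
function globToRegExp(glob: string): RegExp {
  let source = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob[i];
    if (ch === "*" && glob[i + 1] === "*") {
      if (glob[i + 2] === "/") {
        source += "(?:.*/)?"; // "**/" = zero or more directories
        i += 2;
      } else {
        source += ".*"; // trailing "**" = anything, including "/"
        i += 1;
      }
    } else if (ch === "*") {
      source += "[^/]*"; // "*" stays within one path segment
    } else if (ch === "?") {
      source += "[^/]";
    } else {
      source += ch.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
    }
  }
  return new RegExp(`^${source}$`);
}

function isSensitive(path: string, patterns: string[]): boolean {
  const normalized = path.replace(/\\/g, "/");
  const fileName = normalized.split("/").pop() ?? normalized;
  return patterns.some((pattern) =>
    // Patterns without "/" match the file name in any directory
    globToRegExp(pattern).test(pattern.includes("/") ? normalized : fileName),
  );
}

// The default patterns listed above
const DEFAULT_EXCLUDES = [".env*", "*.key", "*.pem", "*.p12", "*.pfx", "credentials.json", "secrets.*", "*.secret", "**/secrets/**"];
```

Filtering the staged file list with `isSensitive` before building the diff keeps matching files out of the prompt entirely. The same result can drive the warning.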

Advanced Configuration

Custom Prompt for Team Conventions

Example: Jira ticket integration

```json
{
  "gitmsgai.prompt": "Given these staged changes:\n{changes}\n\nGenerate a commit message in this format:\n[JIRA-XXX] Brief description\n\nDetailed explanation of changes."
}
```

Fine-tune Rate Limiting

For CI/CD or bulk operations:

```json
{
  "gitmsgai.rateLimitPerMinute": 20
}
```

Aggressive Caching

Reduce API costs by increasing cache size:

```json
{
  "gitmsgai.enableCache": true,
  "gitmsgai.cacheSize": 50
}
```

Custom Conventional Commit Types

For monorepos or specific workflows:

```json
{
  "gitmsgai.conventionalCommits.types": [
    "feat",
    "fix",
    "docs",
    "build",
    "ci",
    "perf",
    "hotfix"
  ],
  "gitmsgai.conventionalCommits.scopes": [
    "api",
    "ui",
    "auth",
    "db"
  ],
  "gitmsgai.conventionalCommits.requireScope": true
}
```

Commands

| Command | Description |
|---|---|
| `GitMsgAI: Generate Commit Message` | Generate a commit message for staged changes |
| `GitMsgAI: Select Provider` | Choose your AI provider (OpenRouter, OpenAI, Google, Claude, Local) |
| `GitMsgAI: Set API Key` | Securely store your API key for the selected provider |
| `GitMsgAI: Select Model` | Browse and select from available models for your provider |
| `GitMsgAI: Test Provider Connection` | Test your provider configuration and API key |
| `GitMsgAI: Clear Cache` | Clear all cached commit messages |

Troubleshooting

"Please set your API key"

- Run the `GitMsgAI: Set API Key` command

- Make sure you've selected the correct provider with `GitMsgAI: Select Provider`

- Verify you have an API key from your selected provider

- Note: Local provider (Ollama/LM Studio) does not require an API key

"Failed to generate commit message"

- Run `GitMsgAI: Test Provider Connection` to diagnose the issue

- Check your internet connection (not needed for Local provider)

- Verify your API key is valid (for the Local provider, ensure Ollama/LM Studio is running instead)

- Increase `gitmsgai.timeout` if requests are timing out

"Rate limit exceeded"

- Wait for the cooldown period (shown in error message)

- Or increase `gitmsgai.rateLimitPerMinute`

Provider-specific issues

- OpenRouter: Check openrouter.ai/status for outages

- OpenAI: Verify you have credits/billing set up at platform.openai.com

- Google: Ensure the API is enabled in your Google Cloud project

- Claude: Check your usage limits at console.anthropic.com

- Local: Ensure Ollama/LM Studio is running and the model is downloaded

Cache not working

- Ensure `gitmsgai.enableCache` is `true`

- Cache only works for identical diffs

- Run `GitMsgAI: Clear Cache` to reset

For more detailed troubleshooting, see docs/PROVIDERS.md.

Development

Want to contribute? See development setup:

- Clone the repository

- Run `npm install`

- Open in VS Code

- Press F5 to start debugging

- Make your changes and submit a PR

Privacy Policy

This extension:

- Only sends git diffs to your selected AI provider when you click the generate button

- Stores your API keys securely using OS-level encryption (one per provider)

- Supports fully local AI models (Ollama/LM Studio) for complete privacy

- Does not collect telemetry or analytics

- Does not track your usage

- Open source - audit the code yourself!

Privacy by Provider:

- OpenRouter, OpenAI, Google, Claude: Data sent to external APIs (see their privacy policies)

- Local (Ollama/LM Studio): All data stays on your machine, completely private

License

This project is licensed under the MIT License - see the LICENSE file for details.

Support