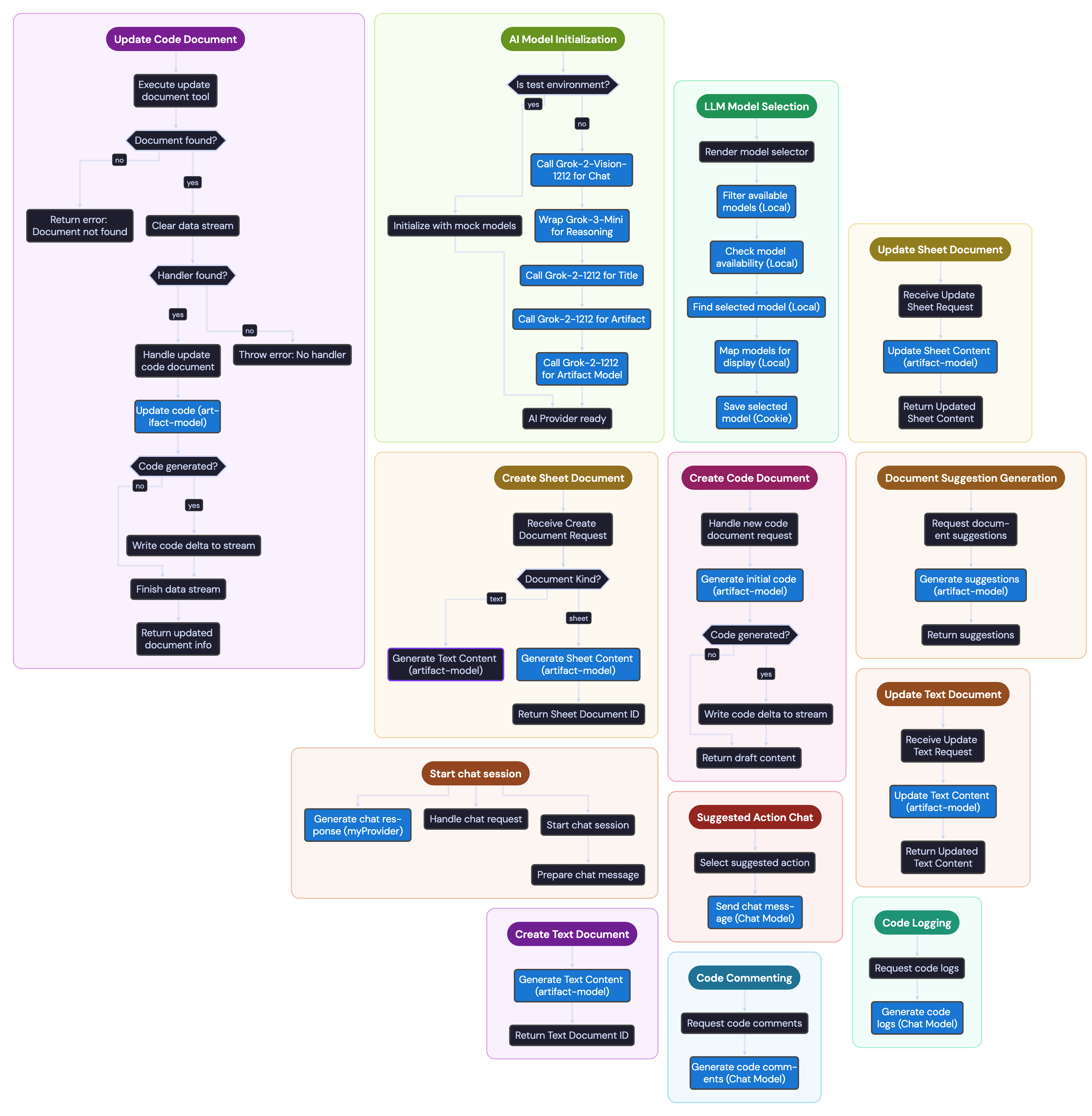

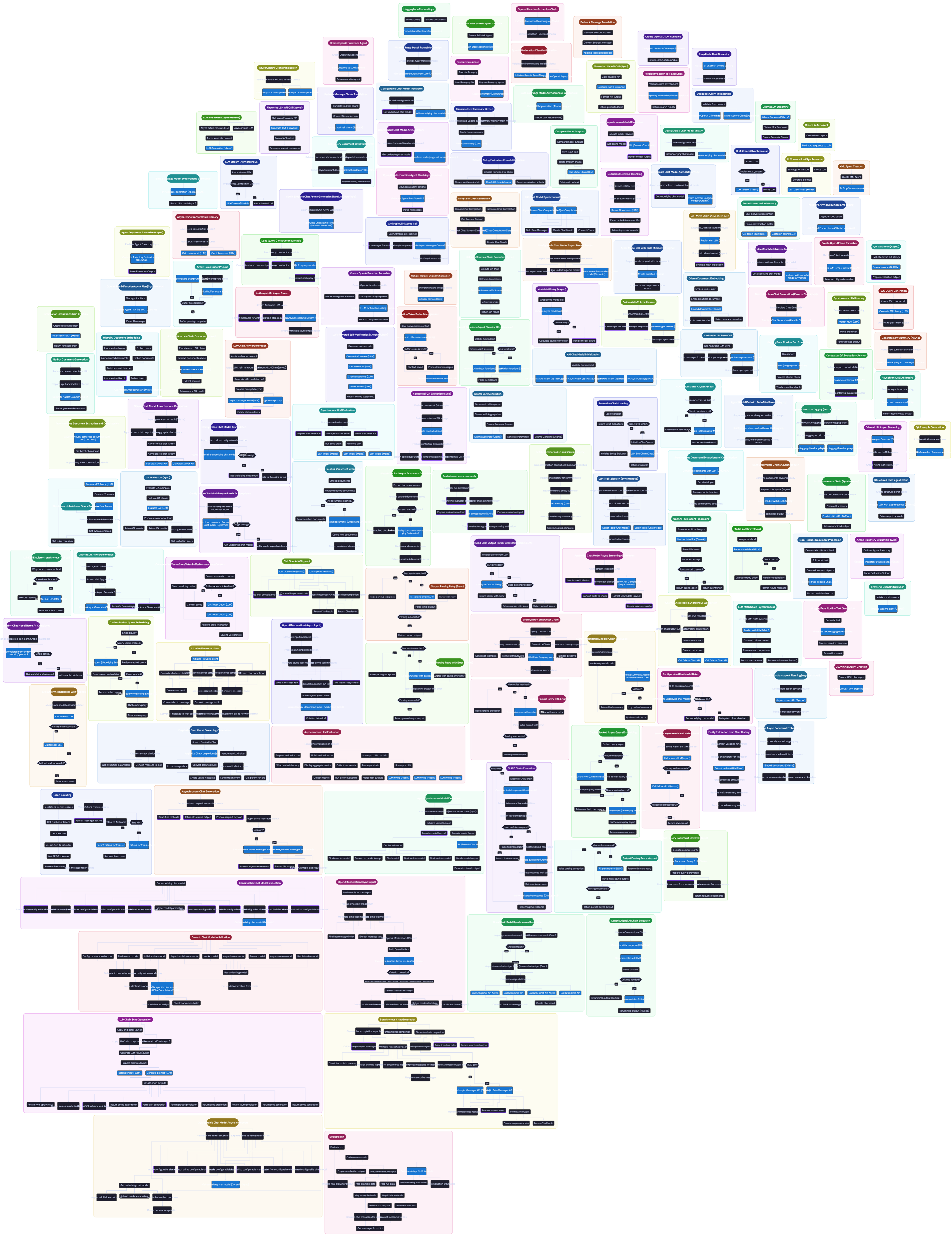

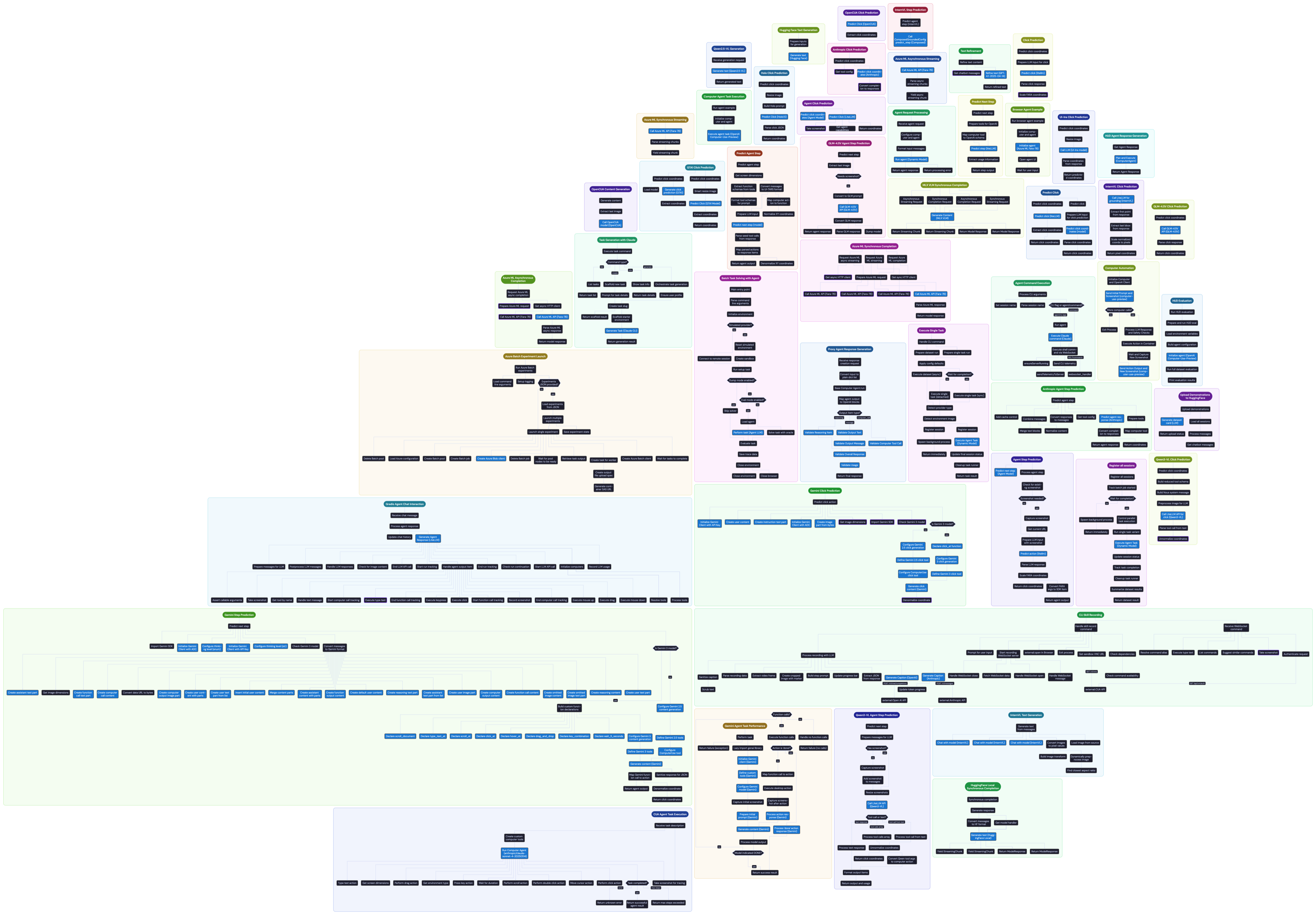

Codag

See how your AI code actually works. Codag analyzes your code for LLM API calls and AI frameworks, then generates interactive workflow graphs directly inside VS Code.
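As a rough illustration of what scanning code for LLM API calls can involve, the sketch below uses Python's standard `ast` module to list which functions contain a call that looks like an LLM request. This is not Codag's implementation: the suffix list `LLM_CALL_SUFFIXES` and the helper names `dotted_name` and `find_llm_calls` are assumptions made for this example only.

```python
import ast

# Hypothetical patterns: dotted call suffixes that often indicate an LLM request.
LLM_CALL_SUFFIXES = ("chat.completions.create", "messages.create")


def dotted_name(node):
    """Return 'a.b.c' for a chain of Attribute/Name nodes, or None otherwise."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def find_llm_calls(source):
    """Map each function name to the LLM-style calls found in its body."""
    tree = ast.parse(source)
    found = {}
    for func in ast.walk(tree):
        if not isinstance(func, ast.FunctionDef):
            continue
        for node in ast.walk(func):
            if isinstance(node, ast.Call):
                name = dotted_name(node.func)
                if name and name.endswith(LLM_CALL_SUFFIXES):
                    found.setdefault(func.name, []).append(name)
    return found


# A tiny sample module: one function makes an LLM-style call, one does not.
SAMPLE = '''
def summarize(client, text):
    return client.chat.completions.create(model="m", messages=[])

def helper(x):
    return x.upper()
'''

print(find_llm_calls(SAMPLE))
```

A real analyzer would also need to follow calls across files and through framework wrappers, which a simple suffix match like this one does not attempt.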

Gallery

Why Codag?

AI codebases are hard to reason about. LLM calls are scattered across files, chained through functions, and wrapped in framework abstractions. Codag maps these calls automatically.

Features

Supported Providers

LLM Providers: OpenAI, Anthropic, Google Gemini, Azure OpenAI, Vertex AI, AWS Bedrock, Mistral, xAI (Grok), Cohere, Ollama, Together AI, Replicate, Fireworks AI, AI21, DeepSeek, OpenRouter, Groq, Hugging Face

Frameworks: LangChain, LangGraph, Mastra, CrewAI, LlamaIndex, AutoGen, Haystack, Semantic Kernel, Pydantic AI, Instructor

AI Services: ElevenLabs, RunwayML, Stability AI, D-ID, HeyGen, and more

IDE APIs: VS Code Language Model API

Languages: Python, TypeScript, JavaScript (JSX/TSX), Go, Rust, Java, C, C++, Swift, Lua

Getting Started

1. Start the Backend

Codag uses a self-hosted backend powered by Gemini 2.5 Flash. You'll need a Gemini API key (free tier available).

Verify it's running:

See the full setup guide for manual installation.

2. Use It

Settings

License